About

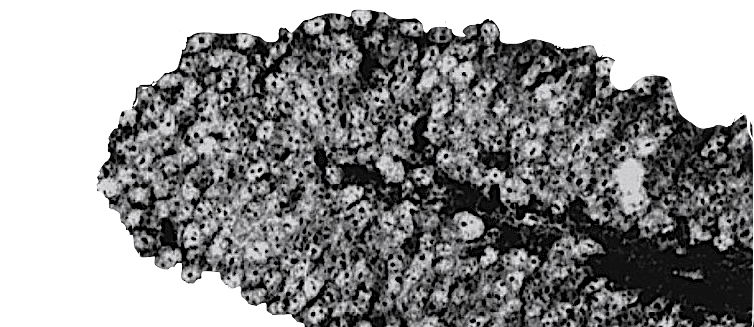

A neuroscientist and researcher by training, I'm passionate about working at the nexus of different disciplines. Currently, I'm continuing the research from my MSc studies with the Institute of Neuroinformatics (Zürich) and developing several other data science projects in areas that include neuroscience, disaster relief, sentiment extraction from social media, and credit risk analysis. For a look at some of my academic work, check out my Bachelor's thesis proposal from New York University, along with the report we published in Nature Neuroscience for that project, and an abstract of my Master's thesis at ETH Zürich and the University of Zürich for the Swiss Society of Neuroscience 2019 Annual Meeting.